RigNeRF: Fully Controllable Neural 3D Portraits

ShahRukh Athar, Zexiang Xu, Kalyan Sunkavalli, Eli Shechtman and Zhixin Shu

TL;DR RigNeRF enables control over facial expressions, head-pose and viewing direction of portrait neural radiance fields.

Abstract

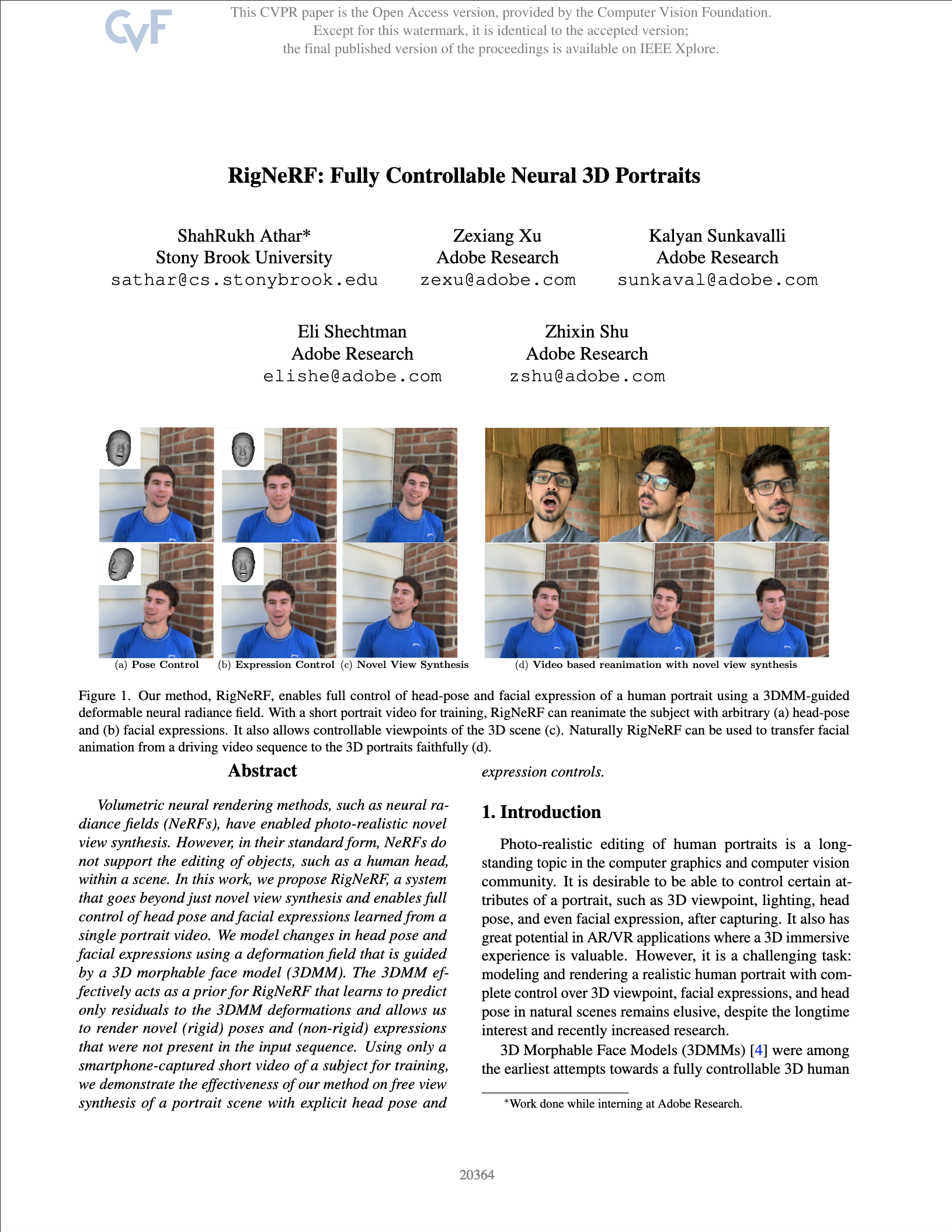

Volumetric neural rendering methods, such as neural radiance fields (NeRFs), have enabled photo-realistic novel view synthesis. However, in their standard form, NeRFs do not support the editing of objects, such as a human head, within a scene. In this work, we propose RigNeRF, a system that goes beyond just novel view synthesis and enables full control of head pose and facial expressions learned from a single portrait video. We model changes in head pose and facial expressions using a deformation field that is guided by a 3D morphable face model (3DMM). The 3DMM effectively acts as a prior for RigNeRF that learns to predict only residuals to the 3DMM deformations and allows us to render novel (rigid) poses and (non-rigid) expressions that were not present in the input sequence. Using only a smartphone-captured short video of a subject for training, we demonstrate the effectiveness of our method on free view synthesis of a portrait scene with explicit head pose and expression controls.

Some Results

Below we show reanimation results across different subjects.

Citation

@inproceedings{athar2022rignerf,

title={RigNeRF: Fully Controllable Neural 3D Portraits},

author={Athar, ShahRukh and Xu, Zexiang and Sunkavalli, Kalyan and Shechtman, Eli and Shu, Zhixin},

booktitle = {Computer Vision and Pattern Recognition (CVPR)},

year = {2022}

}