Rig3DGS: Creating Controllable Portraits from Casual Monocular Videos

Alfredo Rivero*, ShahRukh Athar*, Zhixin Shu and Dimitris Samaras

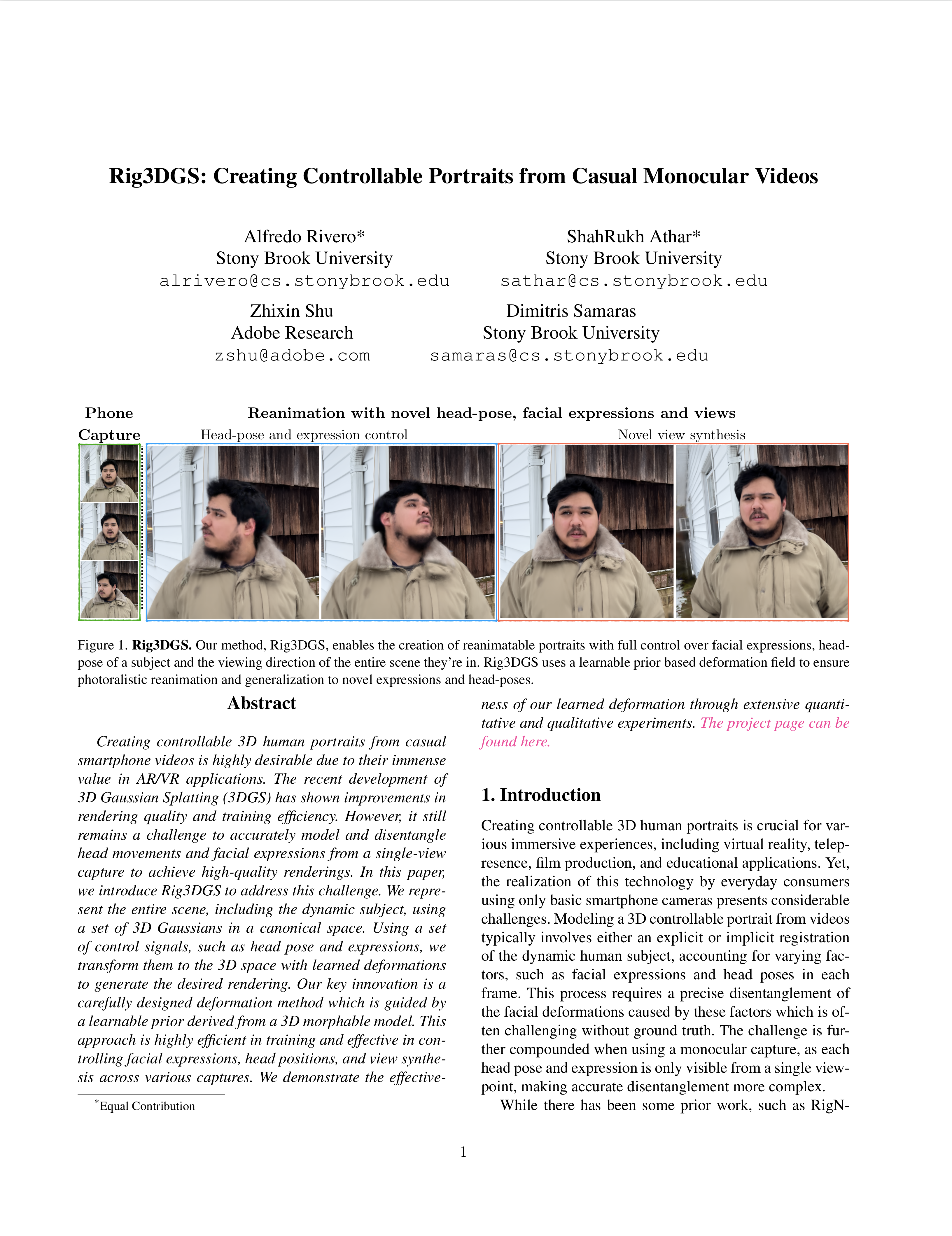

TL;DR From monocular video captures, Rig3DGS rigs 3D Gaussian Splatting to enable the creation of reanimatable portrait videos with control over facial expressions, head-pose and viewing direction.

Abstract

Creating controllable 3D human portraits from casual smartphone videos is highly desirable due to their immense value in AR/VR applications. The recent development of 3D Gaussian Splatting (3DGS) has shown improvements in rendering quality and training efficiency. However, the challenge remains in accurately modeling and disentangling head movements and facial expressions from a single-view capture to achieve high-quality renderings. In this paper, we introduce Rig3DGS to address this challenge. We represent the entire scene, including the dynamic subject, using a set of 3D Gaussians in a canonical space. Using a set of control signals, such as head pose and expressions, we transform them to the 3D space with learned deformations to generate the desired rendering. Our key innovation is a carefully designed deformation method which is guided by a learnable prior derived from a 3D morphable model. This approach is highly efficient in training and effective in controlling facial expressions, head positions, and view synthesis across various captures. We demonstrate the effectiveness of our learned deformation through extensive quantitative and qualitative experiments.

Some Results

Below we show reanimation results across different subjects with changing facial expressions, head-pose and viewing directions

Citation

@misc{rivero2024rig3dgs,

title={Rig3DGS: Creating Controllable Portraits from Casual Monocular Videos},

author={Alfredo Rivero and ShahRukh Athar and Zhixin Shu and Dimitris Samaras},

year={2024},

eprint={2402.03723},

archivePrefix={arXiv},

primaryClass={cs.CV}

}